I have tried to be as objective as possible. Disclaimer: NUnit user since 2005-06.

Legend:

- MSTest is used as an alias for the unit-testing framework bundled with VS2010 v10.0.30319 throughout this post (although technically it is just the runner). It's much easier to say than VisualStudio QualityTools UnitTestFramework. For NUnit, I'm using v2.5.9.

- Class-Setup/Teardown - to be executed ONCE before/after ALL tests

- Test-Setup/Teardown - to be executed before/after EVERY test

Major Differences

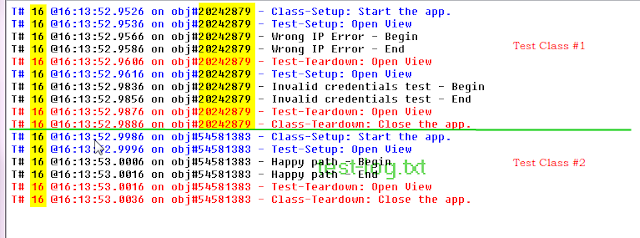

Migrating a test suite between the two runners might be non-trivial depending on your test code. MSTest and NUnit are very different in the way they go about running a set of tests. For example, consider a set of 2 classes containing tests: Class A with 2 tests, B with just one. I inserted logging at some critical points and looked at the default behavior (no customization).

NUnit

Observations:

- Tests are run in sequence (no overlap)

- Single threaded : one thread runs all the tests

- All tests within a test class are run on the SAME instance [1]

- xUnit order:
  - for each test class
    - Class setup
    - for each test
      - test setup
      - test
      - test teardown
    - Class teardown

MSTest

Observations:

- Tests are run in sequence

- Multi-threaded even when tests are run sequentially : We can see 4 threads in this example (1 per test + 1 for class-teardowns)

- A NEW instance of the test class is created PER TEST (see the 3 different hash code values)

- MSTest order:
  - for each test class
    - Class setup
    - for each test
      - test setup
      - test
      - test teardown
  - Class cleanup for all test classes visited

What does all this mean ?

- MSTest differs from xUnit in its implementation of test-class setup and teardown. They have to be static methods, which rules out setting up anything in instance variables to be used by subsequent tests. You can promote them to static (aka global) variables, but combined with multiple threads, be careful!

- MSTest does not provide deterministic test-class teardown i.e. no guarantees about when it will be run; just that it will be executed. So you need to design your tests to account for this - see what happened in the image above. Instead of Start-Close cycles, I see Start-Start-Close-Close!! I haven't yet figured out a workaround for this.. so if you know please post a comment.
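To make the static-method constraint concrete, here is a minimal sketch of an MSTest class with both levels of setup and teardown. The class name `ServerTests` and the `_sharedConnection` field are hypothetical stand-ins for whatever expensive resource your tests share; only the attributes come from the framework.

```csharp
using System;
using Microsoft.VisualStudio.TestTools.UnitTesting;

[TestClass]
public class ServerTests
{
    // Hypothetical shared resource. It must be static, because
    // ClassInitialize/ClassCleanup are static and each test gets a new instance.
    private static string _sharedConnection;

    [ClassInitialize]
    public static void ClassSetup(TestContext context)
    {
        _sharedConnection = "connection opened";
        Console.WriteLine("Class setup");
    }

    [TestInitialize]
    public void TestSetup()
    {
        // Runs before every test; logging the hash code shows the per-test instance.
        Console.WriteLine("Test setup on instance {0}", GetHashCode());
    }

    [TestMethod]
    public void UsesSharedConnection()
    {
        Assert.IsNotNull(_sharedConnection);
    }

    [TestCleanup]
    public void TestTeardown()
    {
        Console.WriteLine("Test teardown");
    }

    [ClassCleanup]
    public static void ClassTeardown()
    {
        // As observed above, this may run later than you expect.
        _sharedConnection = null;
        Console.WriteLine("Class teardown");
    }
}
```

Since the static field is visible to every test, any test that mutates it can affect the others, which is the "be careful" part.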

Test-Class Inheritance

xUnit has some patterns that use inheritance to achieve the desired effect. So let's see how they fare in this department.

[Figures: NUnit : abstract fixture (left) | MSTest : abstract fixture (right)]

- the abstract fixture pattern - move common methods (e.g. common setup/teardown) into an abstract base fixture

- MSTest (right) won't execute Class-Setup/Teardown methods defined in base types. Test-Setup/Teardowns are treated differently. (so you may not be able to layer setup/teardown as shown above ). Also remember Class-Teardown is unpredictable.

- the abstract test pattern - all the tests are housed in an abstract class. You then subclass and override a method that provides the actual object to be tested. e.g. run 'contract tests' against multiple implementations of an interface.

- NUnit : You can cleanly extract a POCO abstract base type with test methods. MSTest: Additionally, you need to decorate the base type also with a TestClass attribute. (It won't show up in the TestView.) But can be done!
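As a rough illustration of the abstract test pattern in NUnit, the sketch below runs the same two contract tests against two implementations of `ICollection<int>`. The class names and the choice of `List<int>` and `HashSet<int>` are my own assumptions for the example, not anything from a real suite.

```csharp
using System.Collections.Generic;
using NUnit.Framework;

// The base class is abstract, so it is never run on its own;
// only the concrete subclasses below show up as fixtures.
public abstract class CollectionContractTests
{
    // Each subclass supplies the implementation under test.
    protected abstract ICollection<int> CreateCollection();

    [Test]
    public void AddedItemIsContained()
    {
        var collection = CreateCollection();
        collection.Add(42);
        Assert.That(collection.Contains(42), Is.True);
    }

    [Test]
    public void ClearRemovesAllItems()
    {
        var collection = CreateCollection();
        collection.Add(1);
        collection.Clear();
        Assert.That(collection.Count, Is.EqualTo(0));
    }
}

[TestFixture]
public class ListContractTests : CollectionContractTests
{
    protected override ICollection<int> CreateCollection()
    {
        return new List<int>();
    }
}

[TestFixture]
public class HashSetContractTests : CollectionContractTests
{
    protected override ICollection<int> CreateCollection()
    {
        return new HashSet<int>();
    }
}
```

For MSTest, the same shape should work once the base type also carries a TestClass attribute, as noted above.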

Parameterized Tests (Data driven)

I remember reading (when I first heard of TDD) that tests should be self-contained and shouldn't need to take inputs. Well, we've changed our mind. The need to execute the same test logic with different data sets (without duplication) led to RowTests. NUnit integrated a contributed extension and now there are TestCases. So let's test how our client handles authentication.

```csharp
[TestCase("WrongUser", "Don't care", TestName = "Invalid user")]
[TestCase("Gishu", "Wrong Password", TestName = "Wrong password")]
[TestCase("Gishu", "openSesame", TestName = "Right credentials")]
public void ThrowsExceptionIfCredentialsAreWrong(string username, string password)
{
    var client = new Client();

    var exception = Assert.Throws<ConnectFailedException>(
        () => client.Connect("129.0.0.1", username, password));

    Assert.That(exception, Has.Message.EqualTo("Invalid Credentials"));
}
```

I love TestNames vs anonymous inline data clumps!!

Let's switch to MSTest. No RowTests built-in. There is a patch, but it is the bare minimum (and requires me to mess with the registry); more on that after the jump. You can't slot in inline data values, but you can pull in data from a CSV/XML/DB. Hmm... seems like a hammer to swat a fly. But I gave that a try too, following "HOW TO: Create a data driven test". So I use the wizard, more attributes appear. It works but...

Differences:

- Does not show me 4 distinct tests in the results (actually the total count circled is wrong..). You cannot name your tests.

- You need to pop up test details (another window) for each group to see which tests failed in the group.

- Even then, you can't see which inputs failed. Annoying.

Dynamic Input generation: But we're still not done, what if we want to compute the inputs using code?? Say hello to NUnit's TestCaseSource and ValueSource. You can write methods that generate test cases at run-time based on any arbitrary logic (with all the frills: names, tags/category, expectedResult/Exception).

Once again MSTest - Not Supported.
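Here is a minimal sketch of what computed test cases can look like with NUnit's TestCaseSource. The fixture, the `DivisionCases` method and the arithmetic are invented for illustration; the point is that the cases are generated in a loop, each with its own name, category and expected result.

```csharp
using System.Collections.Generic;
using NUnit.Framework;

[TestFixture]
public class DivisionTests
{
    // Hypothetical source method: cases are computed in code
    // rather than typed inline as attributes.
    private static IEnumerable<TestCaseData> DivisionCases()
    {
        for (int divisor = 1; divisor <= 3; divisor++)
        {
            yield return new TestCaseData(12, divisor)
                .Returns(12 / divisor)
                .SetCategory("Arithmetic")
                .SetName("12 divided by " + divisor);
        }
    }

    // NUnit compares the return value against the Returns(...) value of each case.
    [Test, TestCaseSource("DivisionCases")]
    public int Divide(int dividend, int divisor)
    {
        return dividend / divisor;
    }
}
```

Each generated case should appear as a separately named test in the runner, just like the inline TestCases earlier.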

Parameterized Test Fixtures

Next the big guns.. you don't need them everyday. But when you do, NUnit allows you to really cut down duplication via Parameterized Fixtures and Generic Fixtures. e.g. consider the following convoluted example

```csharp
[TestFixture(typeof(PhoneX), "Sam", Description = "Model X")]
[TestFixture(typeof(PhoneY), "Htc", Description = "Model Y")]
public class TestAPhone<TPhone> where TPhone : Phone, new()
{
    private TPhone _phoneToBeTested;

    public TestAPhone(string name)
    {
        _phoneToBeTested = new TPhone { Name = name };
    }

    [Test]
    public void TestDialing()
    {
        Console.Out.WriteLine("a phone of type {0} with name {1}", typeof(TPhone), _phoneToBeTested.Name);
    }

    [Test]
    public void TestBatteryLife()...
```

NUnit interprets that as 2 different test fixtures. It is parameterized: "Sam" and "Htc" are parameters passed into the suite. It is generic too: the 2 TestFixture attributes cause the entire suite to be run against an object of PhoneX and PhoneY.

MSTest: NOT SUPPORTED (or I haven't found it).

IDE-Integration

A big attraction with most MS offerings is integration; in this case, IDE integration. MSTest integrates out of the box. NUnit needs some assistance; but if you have something like Resharper (which you should), this isn't a deal-breaker.

Speed of Execution

- MSTest: This is peculiar. I used Ctrl+R, A to run all tests in solution. It takes 4-5 s to run 3 measly tests.. as compared to NUnit which runs an identical set in 0.1s . Around 4 secs elapse, before any test method's status changes - it seems it is doing some heavy lifting in "preparing" to run the tests.

- NUnit: I have NUnit setup to run all my tests when the binaries change. So a successful build triggers a test run. The faster I receive the test results, the less the possibility that I pursue a distraction.

Even in console mode, NUnit was faster. The cold start case takes much longer. However in subsequent runs too, NUnit took 3.5s as compared to 6s for MSTest

NUnit wins the speed test.

Assertion Library

NUnit has a much more evolved assertion library than MSTest. I don't have a detailed comparison here. Personally, I miss the constraint-based Assert model present in NUnit, Hamcrest, et al., which I find more readable.

e.g. Assert.That(actualObject, meets_some_constraint)
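For readers who haven't seen the constraint model, a few sample assertions give the flavor. This is only a sketch with made-up data; the constraints used (`Is.Not.Empty`, `Has.Member`, `Is.EquivalentTo`, `Is.EqualTo`, `Is.GreaterThan`) are a small sample of what NUnit offers.

```csharp
using System.Collections.Generic;
using NUnit.Framework;

[TestFixture]
public class ConstraintModelSketch
{
    [Test]
    public void AssertionsReadLikeSentences()
    {
        var numbers = new List<int> { 1, 2, 3 };

        Assert.That(numbers, Is.Not.Empty);
        Assert.That(numbers, Has.Member(2));
        // Same elements, order ignored.
        Assert.That(numbers, Is.EquivalentTo(new[] { 3, 2, 1 }));
        Assert.That(numbers.Count, Is.EqualTo(3));
        Assert.That(numbers[2], Is.GreaterThan(numbers[0]));
    }
}
```

The classic style, e.g. `Assert.AreEqual(3, numbers.Count)`, is available in both frameworks; the constraint form mainly buys readability and richer failure messages.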

Asserting on exceptions

[Figure: MSTest : Expected Exception not thrown!]

- MSTest: You can use the ExpectedException attribute to state that the corresponding test should throw an exception of Type X. It also takes a message as a second parameter... which is the failure message (to be shown when the test fails), NOT the expected exception message. i.e. you can only check the type of exception.

- NUnit: You can check the type, the ExpectedMessage (you can even specify that the actual exception message must contain/start-with/exactly-match/match-a-regex), a custom handler to verify the exception and a failure message.
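Side by side, the two attributes look roughly like this. The sketch uses `InvalidOperationException` and invented class names purely for illustration, and the NUnit half assumes the 2.5.x API used in this post (NUnit 3 later removed this attribute in favor of Assert.Throws).

```csharp
using System;

namespace MsTestSketch
{
    using Microsoft.VisualStudio.TestTools.UnitTesting;

    [TestClass]
    public class ConnectTests
    {
        // The second argument is the message shown when NO exception is thrown;
        // it is not matched against the exception's own message.
        [TestMethod]
        [ExpectedException(typeof(InvalidOperationException), "Expected Connect to throw")]
        public void ThrowsOnBadCredentials()
        {
            throw new InvalidOperationException("Invalid Credentials");
        }
    }
}

namespace NUnitSketch
{
    using NUnit.Framework;

    [TestFixture]
    public class ConnectTests
    {
        // NUnit 2.5.x: checks the type AND that the message contains "Invalid".
        [Test]
        [ExpectedException(typeof(InvalidOperationException),
            ExpectedMessage = "Invalid", MatchType = MessageMatch.Contains)]
        public void ThrowsOnBadCredentials()
        {
            throw new InvalidOperationException("Invalid Credentials");
        }
    }
}
```

The two namespaces are only there so both attributes, which share a name, can sit in one file.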

[Figure: NUnit : Expected Exception thrown but with incorrect message]

[Figure: NUnit]

```csharp
public static T Throws<T>(Action codeblock) where T : Exception
{
    try
    {
        codeblock();
    }
    catch (T exception)
    {
        return exception;
    }

    Assert.Fail("Did not throw an exception of type " + typeof(T));
    return null;
}
```

Another nitpicky example would be the CollectionAssert implementation.

MSTest accepts ICollection for parameters instead of the more general IEnumerable. So when you're comparing IEnumerable objects, you have to convert them first (e.g. via ToList()):

```csharp
IEnumerable<int> enumerableX = new[] {1, 2, 3};
IEnumerable<int> enumerableY = new[] {1, 2, 4}.Concat(new[]{5});

CollectionAssert.AreEqual(enumerableX.ToList(), enumerableY.ToList(), "myError Message");
// Output:
// CollectionAssert.AreEqual failed. myError Message(Different number of elements.)

/*----------Equivalent NUnit error ---------*/
CollectionAssert.AreEqual(enumerableX, enumerableY, "myError Message");
//Output:
//NUnitTestsClient.ConnectTests.TestCollections:
// myError Message
// Expected is <...List`1[System.Int32]> with 3 elements, actual is <....List`1[System.Int32]> with 4 elements
// Values differ at index [2]
// Expected: 3
// But was: 4
```

Code Coverage

Yup. With MSTest, setting up code coverage is a snap. This is how you do it; the trick is in locating the Configure button :)

That said, getting code coverage for NUnit tests takes just a bit more effort. Create a batch file:

```bat
vsinstr /coverage MyProduction.dll

REM start profiler
vsperfcmd -start:coverage -output:WhatsThe.coverage

REM run tests
[path to nunit-console-x86.exe] MyNUnitTests.dll

REM stop profiler
vsperfcmd -shutdown
```

That creates a coverage file that you can just open up in the IDE, and you get the stats, the line highlighting, et al.

Test Impact

MSTest can point out unit tests that are impacted by a code change, on the basis of function call chains. There is some additional work to avail this benefit (configuration and collecting baselines).

NUnit : not supported.

That said: it works only for unit tests; Personally I'd run all the unit tests but YMMV.. especially if it takes over 10-15 mins to run all of them.

It would have been a HUGE time-saver if it worked for acceptance tests. However at the system level, modules interact via DB, files, networks, etc even though there are no direct function calls ; so doesn't work.

Parallel execution

MSTest seems to be designed to enable executing unit tests in parallel. Here's the how-to.

Note:

- I had to open-and-close my solution for the change to take effect.

- Also you can have either coverage or parallel execution (not both).

NUnit : not supported..yet. However there is PNUnit, an open-source mod. Also it seems likely that parallel execution would be built into the next big release of NUnit. Another approach would be running segments of your tests in parallel on multiple cores/machines.

Misc. Peeves

Some more tiny things that irked me with MSTest

- No hierarchical view of test cases. Flat lists everywhere.

- Have to open a new "test details" window to see details of every test failure. e.g. console output.

- Adds supporting files to the solution (.vsmdi, .testsettings) etc.

- Cannot see console output for all tests in one place. (Useful for debugging).

- Categories can only be applied to tests. Tedious - applying the attribute in 15 places instead of once on top of the test-class. (Duplication). Filtering on categories is non-intuitive in the IDE. Try doing it without looking at the answer.
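The category difference is easiest to see in code. In this sketch (class, method and category names are all made up), NUnit tags the whole fixture once, while MSTest needs the attribute repeated on every test method.

```csharp
using System;

namespace NUnitSketch
{
    using NUnit.Framework;

    // One attribute on the fixture covers every test inside it.
    [TestFixture, Category("Integration")]
    public class DatabaseTests
    {
        [Test]
        public void CanConnect() { }

        [Test]
        public void CanQuery() { }
    }
}

namespace MsTestSketch
{
    using Microsoft.VisualStudio.TestTools.UnitTesting;

    [TestClass]
    public class DatabaseTests
    {
        // The category has to be repeated per test method.
        [TestMethod, TestCategory("Integration")]
        public void CanConnect() { }

        [TestMethod, TestCategory("Integration")]
        public void CanQuery() { }
    }
}
```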

Summary (IMHO):

So NUnit is clearly ahead on Speed, Simplicity, Assertion library, Flexibility e.g. Parameterized (data-driven) tests and informative failures. It has had the benefit of time and shows it. If you're already using it, there is no compelling reason to switch.

MSTest has parallel execution & Test Impact as differentiators. However, that is not enough to make up for its shortcomings as a unit testing framework. Less frequent releases (tied to VS editions) and a slower pace of evolution don't help either. It has just got a lot of small things wrong that make it difficult to like. If you're using TFS, then MSTest would be easier to work with than other unit testing frameworks for obvious reasons. (But then this is your smaller problem.. Sorry couldn't resist that one.)

[1] You can configure the NUnit test assembly/class/method to run on a different thread via the RequiresThread attribute.

This was definitely a good informative read as I am in the process of choosing which product to use for a current application that I'm working on. Heck I couldn't really tell your nunit love until half way through the article which most people just can not do! Keep up the work!

Thanks Ramone. With VS2011, Microsoft seems to be extending support to other test runners (NUnit being one of them). If this extends to code coverage, CI, etc., it'd be a pleasant surprise from the MS testing tools camp. http://blogs.msdn.com/b/visualstudioalm/archive/2012/03/02/visual-studio-11-beta-unit-testing-plugins-list.aspx

This is out of date and most of it has changed with more recent version of VS and MS Test.

Can you be a bit more specific here ? I'm using MSTest regularly (workplace mandate) and it still feels the same to me.